
WWW2009 trip report


with the advent of Web 2.0, social computing has recently emerged as one of the hot research topics. social computing investigates collective intelligence using computational techniques such as machine learning, data mining and natural language processing. it could also be described as a combination of human intelligence and computer power. a very simple example of human/machine interaction is the so-called "captcha" (completely automated public turing test to tell computers and humans apart): a process that combines a computer program and a human being in order to make sure that an input is provided by a human being rather than by another computer program (a robot).
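to make the challenge-response idea a bit more concrete, here is a minimal python sketch of the bookkeeping a captcha server might do. it is a toy under stated assumptions: the function names `issue_challenge` and `verify_response` and the in-memory store are hypothetical, and a real captcha would render the challenge text as a distorted image, which is left out here.

```python
import secrets
import string

# hypothetical in-memory store: challenge token -> expected answer
_pending: dict[str, str] = {}

ALPHABET = string.ascii_uppercase + string.digits

def issue_challenge(length: int = 6) -> tuple[str, str]:
    """Create a random challenge; a real captcha would show `text` as a distorted image."""
    text = "".join(secrets.choice(ALPHABET) for _ in range(length))
    token = secrets.token_urlsafe(16)
    _pending[token] = text
    return token, text

def verify_response(token: str, answer: str) -> bool:
    """Check the answer at most once, ignoring case and surrounding whitespace."""
    expected = _pending.pop(token, None)  # one-shot: a token cannot be replayed
    return expected is not None and answer.strip().upper() == expected
```

removing the token on the first check means a robot cannot simply retry the same challenge until it guesses right.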

while in the simple example above the computer performs most of the work, there are more complex interactions where the human being does the important part of the job. despite the huge progress in computer-based processing, there are still areas where human beings are much more capable than computers, for example when it comes to recognizing and describing the content of images. companies like Google face the problem of adding meaningful meta data to their huge collection of images. today, it is easy to find text related to a particular word or expression, but it is almost impossible to find images based on the image content itself. it is, however, possible to find images if meaningful meta data is available. because machines are not (yet) capable of recognizing the content of an image, humans have to provide this descriptive information. given the size of the collection, it would cost Google a tremendous amount of time and money if they had to hire people to describe all the pictures indexed by their search engine.

therefore they came up with an interesting idea: let the collective do the job. however, they first had to motivate people to participate and second, they had to make sure that people add good labels which describe the content of the image as accurately as possible. there are two main ways to motivate people: money and challenge. the latter is particularly well suited for the Internet community. therefore Google launched a so-called "game with a purpose" (gwap) named "image labeler" (see images.google.com/imagelabeler/). the idea is simple: on a dedicated website, they show the same randomly chosen image to two players and ask them to describe it as well as possible with labels. the players win points if their labels match. matching labels are assumed to be "good" labels that describe the image accurately. with this game, Google can build up a database of meta data for all the stored pictures at very little cost.
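the matching rule of the game can be sketched in a few lines of python. this is only an illustration under assumptions: the functions `play_round` and `collect_metadata`, the normalization (lowercase, trimmed) and the scoring of 100 points per matching label are hypothetical, not Google's actual rules.

```python
from collections import Counter

def play_round(labels_a, labels_b, points_per_match=100):
    """Return the labels both players entered and the points earned (hypothetical scoring)."""
    norm_a = {label.strip().lower() for label in labels_a}
    norm_b = {label.strip().lower() for label in labels_b}
    agreed = norm_a & norm_b  # only labels typed by both players count as "good"
    return agreed, points_per_match * len(agreed)

def collect_metadata(rounds):
    """rounds: (image_id, labels_a, labels_b) triples; count how often each label was agreed on."""
    metadata = {}
    for image_id, labels_a, labels_b in rounds:
        agreed, _ = play_round(labels_a, labels_b)
        metadata.setdefault(image_id, Counter()).update(agreed)
    return metadata
```

labels that are agreed on in many independent rounds are the most trustworthy candidates for an image's meta data.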

other areas of interest to social computing are so-called social networking sites such as blogger (blogs, online "diaries"), digg (news, images, videos), facebook (online community), flickr (shared photos), foxytunes (music), knol.google.com (shared collaborative knowledge), myspace (online community), QQ (online community in china), twitter (instant shared news), Wikipedia (shared collaborative knowledge) and YouTube (shared videos).

from search to social search: today, it is easy to find facts, but it is very difficult to find opinions or recommendations. we can easily find restaurants in a particular town, but sometimes we need personalized search to answer queries like "where is the best place in this town to eat pasta?". it would be helpful to attach scores such as "recommended", "i like", "i dislike" or "stay away from" to links. techniques and algorithms have to be developed to support search engines with "social data" such as recommendations.
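one very simple way to use such ratings is to turn them into a number and mix it into the ranking. the python sketch below assumes hypothetical weights for the four rating labels and a plain additive combination with the usual text relevance; real social search would need far more care (spam, trust, personalization).

```python
# hypothetical weights for the rating labels mentioned above (an assumption,
# not taken from any real search engine)
RATING_WEIGHTS = {"recommended": 2, "i like": 1, "i dislike": -1, "stay away from": -2}

def social_score(ratings: list[str]) -> int:
    """Sum the weights of all ratings a link received; unknown labels count as 0."""
    return sum(RATING_WEIGHTS.get(r, 0) for r in ratings)

def rerank(results: list[tuple[str, float, list[str]]], social_weight: float = 1.0):
    """Order (url, text_relevance, ratings) triples by relevance plus weighted social score."""
    return sorted(
        results,
        key=lambda r: r[1] + social_weight * social_score(r[2]),
        reverse=True,
    )
```

the `social_weight` parameter controls how strongly opinions may override plain text relevance.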


cesare pautasso and erik wilde

recent technology trends in Web services indicate that a solution eliminating the perceived complexity of the WS-* standard technology stack may be in sight: advocates of REpresentational State Transfer (REST) have come to believe that their ideas explaining why the World Wide Web works are just as applicable to solving enterprise application integration problems and to radically simplifying the plumbing required to implement a Service-Oriented Architecture (SOA). in this tutorial, cesare and erik gave an introduction to the REST architectural style as the foundation for RESTful Web services. the tutorial started from the basic design principles of REST and how they are applied to service-oriented computing. it then dove into the details of REST design methodologies and ended with a comprehensive comparison between RESTful and "traditional" Web services.

REpresentational State Transfer (REST) is defined as an architectural style, which means that it is not a concrete systems architecture but a set of constraints that are applied when designing a systems architecture. they explained how the Web is one such systems architecture that implements REST. in particular, cesare and erik explored the mechanisms of Uniform Resource Identifiers (URI), the HyperText Transfer Protocol (HTTP), media types and markup languages such as the HyperText Markup Language (HTML) and the eXtensible Markup Language (XML). they also introduced Atom and the Atom Publishing Protocol (AtomPub) as two established ways in which RESTful services are already provided and used on today's Web.
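the idea of resources named by URIs and manipulated through HTTP's uniform interface can be illustrated with a small python sketch. it is a toy under stated assumptions: the class `ResourceStore`, the example URIs and the JSON bodies are hypothetical, and a real service would sit behind an actual HTTP server instead of a method call. the point is that a client only ever combines a URI with one of a few standard methods.

```python
import json

class ResourceStore:
    """Toy in-memory server: every resource is named by a URI and manipulated
    only through the four HTTP methods of the uniform interface."""

    def __init__(self):
        self._resources = {}  # URI -> decoded JSON representation
        self._next_id = 1     # counter for server-assigned URIs (POST)

    def handle(self, method, uri, body=None):
        """Process one request and return an (HTTP status code, response body) pair."""
        if method == "GET":  # safe: read a representation, change nothing
            if uri not in self._resources:
                return 404, None
            return 200, json.dumps(self._resources[uri])
        if method == "PUT":  # idempotent: create or replace at a client-chosen URI
            created = uri not in self._resources
            self._resources[uri] = json.loads(body)
            return (201 if created else 200), None
        if method == "POST":  # create a subordinate resource; the server picks its URI
            new_uri = f"{uri.rstrip('/')}/{self._next_id}"
            self._next_id += 1
            self._resources[new_uri] = json.loads(body)
            return 201, new_uri  # a real server would send this in a Location header
        if method == "DELETE":  # remove the resource if it exists
            if uri not in self._resources:
                return 404, None
            del self._resources[uri]
            return 204, None
        return 405, None  # any other method is not allowed here
```

for example, `ResourceStore().handle("PUT", "/orders/42", '{"item": "pasta"}')` creates a resource and returns `(201, None)`.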

RESTful services can represent the same resource both in a human-readable way (HTML) and in a machine-readable way (XML).
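choosing between these representations is usually done through HTTP content negotiation, based on the client's `Accept` header. the python sketch below is a simplified illustration: the function `represent` is hypothetical, it ignores q-values and simply takes the first supported media type in the order listed, and it assumes the resource's keys are valid XML element names.

```python
from html import escape
from typing import Optional
from xml.etree.ElementTree import Element, SubElement, tostring

def represent(resource: dict, accept: str) -> Optional[tuple[str, str]]:
    """Return (media type, body) for the first acceptable media type in `accept`.

    Simplification: media types are tried in the order listed; q-values are ignored.
    """
    for part in accept.split(","):
        media_type = part.split(";")[0].strip()
        if media_type in ("application/xml", "text/xml"):
            # machine-readable: one child element per field
            root = Element("resource")
            for key, value in resource.items():
                SubElement(root, key).text = str(value)
            return "application/xml", tostring(root, encoding="unicode")
        if media_type in ("text/html", "*/*"):
            # human-readable: a simple HTML list
            items = "".join(f"<li>{escape(str(k))}: {escape(str(v))}</li>" for k, v in resource.items())
            return "text/html", f"<ul>{items}</ul>"
    return None  # nothing acceptable: a server would reply 406 Not Acceptable
```

a browser typically asks for HTML first, while a program can ask for XML and parse it, yet both address the same URI.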

the five design constraints for RESTful services are:

cesare and erik suggested the following design methodology:

Web services (WS) versus RESTful services:

WS are based on HTTP, XML and SOAP and use WSDL (Web Service Description Language) to describe the available services. RESTful services are based on HTTP, JSON (JavaScript Object Notation), XML, RSS or Atom and may use WADL (Web Application Description Language) to describe the available services.
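to illustrate the difference in style, the following python sketch only builds (and never sends) two requests with the standard `urllib.request.Request` class. the host `example.org`, the `/orders/42` resource, the `OrderService` endpoint and the `getOrder` operation are all hypothetical. the RESTful call names the resource in the URI and the action in the HTTP method, whereas the SOAP call POSTs an XML envelope to a single endpoint and names the operation inside the body.

```python
from urllib.request import Request

BASE = "http://example.org"  # hypothetical host, nothing is actually sent

# RESTful style: the URI identifies the resource, GET says what to do with it
rest_request = Request(f"{BASE}/orders/42", headers={"Accept": "application/json"})

# WS-* style: a SOAP 1.1 envelope POSTed to one service endpoint;
# the operation (getOrder) is named inside the XML body
soap_body = """<?xml version="1.0"?>
<soap:Envelope xmlns:soap="http://schemas.xmlsoap.org/soap/envelope/">
  <soap:Body>
    <getOrder xmlns="http://example.org/orders"><id>42</id></getOrder>
  </soap:Body>
</soap:Envelope>"""
soap_request = Request(
    f"{BASE}/OrderService",
    data=soap_body.encode("utf-8"),  # having a body makes urllib default to POST
    headers={
        "Content-Type": "text/xml; charset=utf-8",
        "SOAPAction": '"http://example.org/orders/getOrder"',
    },
)

print(rest_request.get_method(), rest_request.full_url)  # GET http://example.org/orders/42
print(soap_request.get_method(), soap_request.full_url)  # POST http://example.org/OrderService
```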

for further details, see dret.net/netdret/docs/soa-rest-www2009/intro.

when we approached the conference building this morning, it felt as if we were part of an action movie: there was security everywhere, and at the entrance we had to pass a security check like at an airport. the reason for this was the presence of the princess of asturias, letizia ortiz rocasolano, and her husband, the prince of asturias, felipe de borbón y grecia, during the opening ceremony.

the opening ceremony started with a panel discussion to celebrate the 20th anniversary of the Web. wendy hall acted as chair; the panelists included mike shaver (vice president of engineering at mozilla corporation, *17-feb-1977, canada), dale dougherty (co-founder of o'reilly media), vinton cerf (google, *23-jun-1943, USA), robert cailliau (cern, *26-jan-1947, belgium) and tim berners-lee (cern/w3c, *8-jun-1955, UK).

after the panel, prince felipe delivered his welcome address while the photographers gathered around his very attractive wife and lit up the stage with their flashes. unfortunately, the princess was nothing but decoration: she didn't say a single word. i found this a rather embarrassing situation ...

this was followed by the keynote by tim berners-lee titled "reflecting on the last 20 years and looking forward to the next 20". in march 1989, tim berners-lee presented his proposal titled "hypertext and CERN" to his boss, which produced almost no reaction. a year later, tbl started circulating the same proposal again, and this time he got permission to start working on the project - a project which started what is known today as the "World Wide Web". the Web has changed many people's lives during the last two decades, including mine. in spring 1994, i was hired by the swiss federal institute of technology as a system manager for their central VMS systems. in may of the same year, they sent me to WWW1, and when i came back from the conference, i set up our first website. later, i started managing webservers and began to produce and maintain content. today, i'm what we call a "technical webmaster", administering a number of webservers, while others have become responsible for the content. however, the Web is omnipresent not only during office hours but also during leisure time. and this is true not only for myself, but also for my wife and even more so for our three kids. the computer is always on and the browser window is always open. no doubt, the Web has changed my life completely, and i'm sure the same is true for many of us. but what will the future bring? tbl said: "Web 3.0 is Web 2.0 with the silos broken down". does this mean that the "social websites" are silos and that they will be mashed up in the future? in my opinion, the key factor of Web 1.0's success was its simplicity and the fact that failure is an inherent part of the concept (the famous 404 "not found"). the versions after 1.0 are more complicated and therefore have more trouble becoming widely accepted. i certainly hope that one day i will have the chance to look back on what we today call the next 20 years and to see how the Web evolved in its third and fourth decades. regardless of the version number, it will be very exciting, i'm sure.

there is more information available about the history of the Web at CERN and at W3C. here is also a copy of tbl's first proposal - certainly worth reading.

in the afternoon, i attended talks about Web security. talks about security always tend to be depressing, because presenters list all the bad things that can happen to us, and this afternoon was no different. one session was about social networks and how easily they can be abused to gather personal information. for example, the researchers invited randomly chosen users of a social network to become their friends. 60% of the users accepted the invitation and thereby gave a complete stranger access to their private information. we certainly need to educate the people around us, and especially our kids, to keep their privacy in mind. once information has been published on the Web, it is almost impossible to revoke it. there are so many caches, search engines and automated procedures harvesting all kinds of information from the Web that we cannot erase it from all the different data stores. on the other hand, we also have to make sure that our kids do not trust all the information provided on Web pages or in emails. even if multiple documents on different sites provide the same information, it is not necessarily true: all the authors may just have copied it from the famous wikipedia. and if someone has posted false data in wikipedia, either by accident or intentionally, all the other Web pages are incorrect as well.

the day began with the keynote titled "the continuing metamorphosis of the Web" by alfred z. spector, a vice president at Google. it was not too surprising that his talk focused on search. in his view, there are four key areas in search: text, voice, image and video. i liked alfred spector's vision that voice search will become the killer application for voice recognition. for me, it would be a dream come true if one day we could look up information on our mobile phone using our voice, talk to our computer, or get directions from a navigation device controlled by voice commands.

the keynote was followed by a panel about Web science. tim berners-lee (W3C), mike brodie (Verizon US) and ricardo baeza-yates (Yahoo! Research) discussed questions such as "what do we need to understand about the Web that we don't understand?". there are many controversial opinions about what Web science is all about and whether it is needed at all. advocates of Web science suggest that it has to be an interdisciplinary science and claim there is a need to scientifically analyze not only technical aspects such as computing, networking and math, but also economic, social and cultural implications. the panelists talked about the unexpected success of social networks such as flickr and the need to analyze why they are so successful. they also identified trust versus privacy as the biggest challenge.

in the afternoon, i attended a number of talks related to social networks and privacy. information available from social networks is often used for scientific work. for example, there was a paper about analyzing and automatically categorizing photos using various text and image processing algorithms. the authors downloaded more than 60 million photos from flickr, which served as the dataset for their work. through statistical analysis, they found some interesting facts. according to this paper, the seven most photographed landmarks on our planet are, in this order: eiffel tower (paris), trafalgar square (london), tate modern (london), big ben (london), notre dame (paris), london eye (london) and empire state building (new york). more precisely, i should rather say: these are the landmarks that appear most often in photos published on flickr. of course, the focus of this paper is not really to rank the most popular landmarks, but to find procedures to automatically cluster photos of the same "thing" (see www.cs.cornell.edu/~crandall/photomap/ for details).
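the clustering used in the paper is more sophisticated than this; as a much simpler stand-in, the following python sketch shows the basic idea of finding places where many geotagged photos were taken by counting photos per grid cell. the function `hotspots`, the grid size of 0.01 degrees and the sample coordinates are all assumptions for illustration.

```python
from collections import Counter

def hotspots(photos, cell_deg=0.01, top=3):
    """Group (lat, lon) photo positions into a grid of cell_deg x cell_deg degrees
    and return the `top` busiest cells as ((lat, lon) of cell centre, photo count)."""
    cells = Counter(
        (round(lat / cell_deg), round(lon / cell_deg)) for lat, lon in photos
    )
    return [((i * cell_deg, j * cell_deg), count) for (i, j), count in cells.most_common(top)]
```

a fixed grid can split one landmark across two cells, which is one reason why real systems use proper clustering algorithms instead.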

however, people publishing personal information on the Web, including photos, videos, reports etc., should be aware that their information can and will be used for all kinds of tasks. our information will not only be consumed by our friends and interested visitors as we may have intended, but it may also be used for all kinds of (scientific) work. it is important to understand that there is only limited privacy on the Web. and once information is or was publicly available, it is almost impossible to delete it from the Web. one may remove the content from its original location, but there are most likely many copies stored in the caches of search engines and in archives such as the Internet archive. therefore it is extremely important to think twice before publishing personal information, particularly on social networking sites, because these sites may, either accidentally or intentionally, change their access policies and make private information publicly available. even if this happens only for a short period, the damage is most likely irreversible.

the last day of the conference started with the keynote by pablo rodriguez (scientific director for Internet at telefonica) titled "Web infrastructure for the 21st century". he described the "three waves of networking": telephony was introduced in 1930, computer networks started in 1960 and the Web kicked off the third wave in 1990. he stated that the amount of content on the Web will increase by a factor of six from 2006 to 2010. according to pablo rodriguez, the first approach to dealing with this additional load is to set up more caches. his speech was followed by the keynote titled "mining the Web 2.0 for better search" by ricardo baeza-yates from yahoo!, who provided a look behind the scenes at yahoo! research regarding ideas and attempts to improve search using social networks, also known as the "wisdom of the crowd".
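to get a feeling for what a factor of six over these four years means, assume (purely for illustration, the talk did not necessarily claim steady growth) that content grows by the same factor g every year from 2006 to 2010:

$$
g^{4} = 6 \quad\Rightarrow\quad g = 6^{1/4} \approx 1.565
$$

in other words, roughly 57% more content every single year.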

for the rest of the day, i attended the so-called "W3C social web camp". a social web working group is about to be established. the four major issues are:

there were a number of short presentations about social networks: some about how to analyze social data, how to find relations and what they could mean, others about privacy and how to protect personal data in social networks. see www.w3.org/2009/04/w3c-track.html#socialweb for further information.

the conference ended with the traditional closing ceremony which included the presentation of various award winners. the next Web conference will be held in april 2010 in raleigh, north carolina, USA, see WWW2010.

you may also read my daily blog entries which i posted while i was in madrid (posts are available in german only):

please check out this other trip report:


roberto (UZH) and reto (ETHZ)

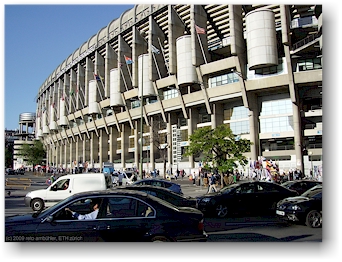

on the evening of april 22, roberto and i went to the football match real madrid against getafe at the world-famous santiago bernabeu stadium. even though getafe was only in 16th place in the current standings, the stadium, with a capacity of 80,000, was almost sold out. real madrid won the game thanks to a lucky late goal in stoppage time.


real madrid's home stadium: santiago bernabeu

one evening, i strolled from the conference center down the avenida de la capital de españa to the big roundabout with a tall statue of juan carlos teresa silvestre alfonso, also known as don juan de borbón y battenberg. there are many fancy buildings along this avenue, and i could not resist taking a few pictures. i also spent some time in the beautiful parque juan carlos I, a quiet and peaceful place with lots of spots to relax and plenty of artificial water features to watch.

the series of Web conferences started in spring 1994 with WWW1, held at CERN near geneva, switzerland. in fall 1994, there was a second conference in chicago, USA. because the organizers had announced that there would be two conferences each year, one in europe and one in the US, i did not attend WWW2. but at WWW3 in darmstadt, germany, they announced that in the future there would be only one conference per year. i managed to convince my boss that i should attend WWW4 in boston, even though i had already been to darmstadt, and from then on i have attended every Web conference up to today. the table below lists all conferences and provides links to my trip reports as well as to the official conference websites where applicable.

| no | year | conference | location | country | number of attendees* |
|---|---|---|---|---|---|
| 1 | 1994 | WWW1 | geneva | switzerland (CH) | 380 |
| 2 | 1994 | WWW2 | chicago | USA | 750 |
| 3 | 1995 | WWW3 | darmstadt | germany (D) | 1075 |
| 4 | 1995 | WWW4 | boston | USA | 2000 |
| 5 | 1996 | WWW5 | paris | france (F) | 1452 |
| 6 | 1997 | WWW6 | santa clara | USA | 2000 |
| 7 | 1998 | WWW7 | brisbane | australia (AUS) | 1100 |
| 8 | 1999 | WWW8 | toronto | canada (CAN) | 1200 |
| 9 | 2000 | WWW9 | amsterdam | netherlands (NL) | 1400 |
| 10 | 2001 | WWW10 | hongkong | hongkong (HK) | 1220 |
| 11 | 2002 | WWW2002 | honolulu | USA | 900 |
| 12 | 2003 | WWW2003 | budapest | hungary (H) | 850 |
| 13 | 2004 | WWW2004 | new york | USA | 1000 |
| 14 | 2005 | WWW2005 | chiba | japan (J) | 900 |
| 15 | 2006 | WWW2006 | edinburgh | scotland (UK) | 1124 |
| 16 | 2007 | WWW2007 | banff | canada (CA) | 940 |
| 17 | 2008 | WWW2008 | beijing | china (CN) | 875 |
| 18 | 2009 | WWW2009 | madrid | spain (ES) | 1000 |

*) note: it is very difficult to get an accurate number of attendees. the numbers are based either on the printed list of attendees where available, on statements made by the organizers, or on my own observations. the number for WWW2 is just an estimate because i missed that conference and could not find any figures on the Web.

this trip report was written on an ASUS Eee PC running Windows 7 Ultimate using Softquad HoTMetaL. this document is intended to be compliant with HTML 4.0 Strict.